How to Use GPU in Docker on Linux

Introduction

Docker has changed how developers build and deploy applications, but by default containers cannot access the host's GPU. If you work with AI, machine learning, video processing, or 3D rendering, you will want GPU access inside your containers.

In this guide, we’ll show you how to set up and use your GPU in Docker on Linux using the NVIDIA Container Toolkit. The commands assume an NVIDIA GPU and an apt-based distribution such as Ubuntu or Debian.

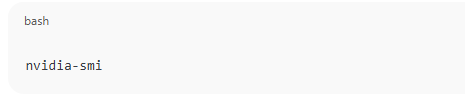

Step 1: Check GPU and Drivers

First, verify that your Linux system detects the GPU correctly. Run:

nvidia-smi

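If you see your GPU details (such as model and driver version), the driver is installed and working. If not, install a current NVIDIA driver:

sudo apt install nvidia-driver-###

(Replace ### with a driver branch number available for your distribution. On Ubuntu, running ubuntu-drivers devices lists the recommended one.)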
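The check above can also be scripted. The sketch below is a minimal example that assumes your driver's nvidia-smi supports the --query-gpu and --format options. The function name summarize_gpus is our own and not part of any NVIDIA tool.

```shell
#!/bin/sh
# Print one line per GPU in the form "<model> (driver <version>)".
# Expects CSV rows of the form "<model>, <driver version>" on stdin.
summarize_gpus() {
    while IFS=, read -r name driver; do
        # Drop the single space that follows the comma in the CSV output.
        driver=${driver# }
        [ -n "$name" ] && printf '%s (driver %s)\n' "$name" "$driver"
    done
    return 0
}

if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=name,driver_version --format=csv,noheader | summarize_gpus
else
    echo "nvidia-smi not found: install the NVIDIA driver first" >&2
fi
```

If the script prints nothing even though nvidia-smi is installed, the driver is probably not loaded, and a reboot after installation is often needed.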

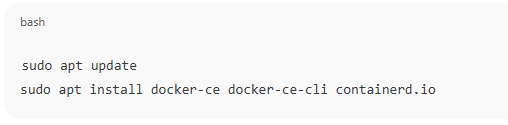

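Step 2: Install Docker

If Docker isn’t installed yet, use the following commands. The docker-ce packages come from Docker's official apt repository, so add that repository first if you haven't already: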

sudo apt update

sudo apt install docker-ce docker-ce-cli containerd.io

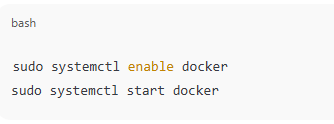

Enable and start Docker:

sudo systemctl enable docker

sudo systemctl start docker
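Before moving on, it can help to confirm that the Docker daemon is reachable and recent enough. The --gpus flag used later in this guide was introduced in Docker 19.03. The sketch below is one way to check this. The helper name version_at_least is hypothetical.

```shell
#!/bin/sh
# Succeed if version string $1 (e.g. "24.0.7") is at least MAJOR ($2) . MINOR ($3).
version_at_least() {
    major=${1%%.*}
    rest=${1#*.}
    minor=${rest%%.*}
    [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
}

if command -v docker >/dev/null 2>&1; then
    # Ask the daemon for its version; an empty result means it is not reachable.
    server=$(docker version --format '{{.Server.Version}}' 2>/dev/null) || true
    if [ -z "$server" ]; then
        echo "Docker is installed but the daemon is not reachable" >&2
    elif version_at_least "$server" 19 3; then
        echo "Docker $server supports --gpus"
    else
        echo "Docker $server is older than 19.03, so --gpus is unavailable" >&2
    fi
else
    echo "docker not found" >&2
fi
```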

Step 3: Install NVIDIA Container Toolkit

The NVIDIA Container Toolkit lets Docker expose the host's NVIDIA driver and GPU devices to containers.

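Add the NVIDIA package repository and its signing key. The apt-key command is deprecated, so the key is stored in a dedicated keyring instead:

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg

curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list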

Now install the toolkit:

sudo apt update

sudo apt install -y nvidia-container-toolkit

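Register the NVIDIA runtime with Docker, as NVIDIA's installation guide recommends, then restart Docker to apply the changes:

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker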
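If the NVIDIA runtime has been registered with Docker (for example with sudo nvidia-ctk runtime configure --runtime=docker), it appears in the runtime list reported by docker info. The following sketch checks for it. The helper name has_nvidia_runtime is our own, and it simply looks for an "nvidia" key in the JSON output.

```shell
#!/bin/sh
# Succeed if the runtime list read from stdin (JSON from `docker info`)
# contains an entry named "nvidia".
has_nvidia_runtime() {
    grep -q '"nvidia"'
}

if command -v docker >/dev/null 2>&1; then
    if docker info --format '{{json .Runtimes}}' 2>/dev/null | has_nvidia_runtime; then
        echo "NVIDIA runtime is registered with Docker"
    else
        echo "NVIDIA runtime not listed; check the toolkit installation" >&2
    fi
else
    echo "docker not found" >&2
fi
```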

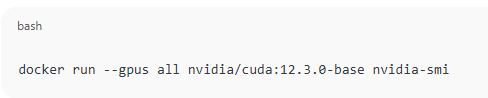

Step 4: Run a GPU-Enabled Docker Container

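You can now launch a container with GPU access. CUDA image tags on Docker Hub include an OS suffix, so use a tag that is currently published (the one below is an example):

docker run --rm --gpus all nvidia/cuda:12.3.0-base-ubuntu22.04 nvidia-smi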

If everything’s configured correctly, you’ll see the same GPU info inside the container as on your host system.
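To make the comparison between host and container explicit, you could capture the GPU list from both sides and compare them. This is a minimal sketch: nvidia-smi -L prints one line per GPU, the image tag is an assumption that can be overridden through the IMAGE variable, and same_gpus is a hypothetical helper.

```shell
#!/bin/sh
# Image tag is an assumption; substitute any CUDA tag currently published on Docker Hub.
IMAGE=${IMAGE:-nvidia/cuda:12.3.0-base-ubuntu22.04}

# Succeed when the two GPU listings passed as arguments are identical and non-empty.
same_gpus() {
    [ -n "$1" ] && [ "$1" = "$2" ]
}

if command -v docker >/dev/null 2>&1 && command -v nvidia-smi >/dev/null 2>&1; then
    host=$(nvidia-smi -L 2>/dev/null) || true
    container=$(docker run --rm --gpus all "$IMAGE" nvidia-smi -L 2>/dev/null) || true
    if same_gpus "$host" "$container"; then
        echo "Container sees the same GPUs as the host"
    else
        echo "GPU lists differ or are empty" >&2
    fi
else
    echo "docker or nvidia-smi not found" >&2
fi
```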

Step 5: Limit GPU Usage (Optional)

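You can restrict which GPUs a container can use. The example below exposes only the GPU with index 1, which is the second GPU listed by nvidia-smi:

docker run --rm --gpus '"device=1"' nvidia/cuda:12.3.0-base-ubuntu22.04 nvidia-smi

Or specify how many GPUs to expose:

docker run --rm --gpus 2 nvidia/cuda:12.3.0-base-ubuntu22.04 nvidia-smi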
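The nested quoting in the device form is easy to get wrong, especially with more than one GPU. A small helper can build the value for you. This is a sketch, and gpus_arg is a hypothetical name rather than a Docker command.

```shell
#!/bin/sh
# Build the value for `docker run --gpus` from a list of GPU indices or UUIDs.
# The inner double quotes keep Docker from splitting the list at the commas.
gpus_arg() {
    list=""
    for dev in "$@"; do
        list="${list:+$list,}$dev"
    done
    printf '"device=%s"\n' "$list"
}

gpus_arg 0 2    # prints "device=0,2" (including the double quotes)
```

You would then pass the result to Docker, for example docker run --rm --gpus "$(gpus_arg 0 2)" followed by the image name and command.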

Step 6: Use in Docker Compose

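To use GPU support in Docker Compose, add a device reservation to the service in your Compose file. Indentation matters in YAML, so keep the nesting as shown:

services:
  app:
    image: nvidia/cuda:12.3.0-base-ubuntu22.04
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - capabilities: [gpu]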

Then run:

docker compose up
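If you want to check a Compose file before starting anything, docker compose config can validate it. The sketch below writes a GPU-enabled file to a temporary directory and validates it when the Compose plugin is present. The function name write_gpu_compose, the image tag, and the optional driver and count fields are our assumptions, not requirements.

```shell
#!/bin/sh
# Write a minimal GPU-enabled Compose file to the path given as $1.
# The image tag is an assumption; use a CUDA tag that exists on Docker Hub.
write_gpu_compose() {
    cat > "$1" <<'EOF'
services:
  app:
    image: nvidia/cuda:12.3.0-base-ubuntu22.04
    command: nvidia-smi
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
EOF
}

workdir=$(mktemp -d)
write_gpu_compose "$workdir/compose.yaml"

# Validate the file only if the Compose plugin is available.
if command -v docker >/dev/null 2>&1 && docker compose version >/dev/null 2>&1; then
    if docker compose -f "$workdir/compose.yaml" config --quiet; then
        echo "Compose file is valid"
    else
        echo "Compose file failed validation" >&2
    fi
else
    echo "docker compose not available; wrote $workdir/compose.yaml" >&2
fi
```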

Conclusion

By enabling GPU support in Docker, you can run AI models, deep learning workloads, and video rendering directly inside containers. With the NVIDIA Container Toolkit, Docker seamlessly integrates with your GPU hardware, giving you the power of accelerated computing in a portable environment.

How to Use GPU in Docker on Linux (FAQ)

Do I need an NVIDIA GPU to use GPU in Docker?

For the setup in this guide, yes. The NVIDIA Container Toolkit supports only NVIDIA GPUs.

Can I use AMD GPUs in Docker?

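Yes, but not through the NVIDIA Container Toolkit. AMD GPUs require the ROCm (Radeon Open Compute) stack, which is less common and uses different setup steps, typically passing the /dev/kfd and /dev/dri devices to the container.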

Is GPU passthrough available for Docker in WSL2?

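Yes. With a recent WSL2 release, you can use --gpus all if you have an NVIDIA GPU and an NVIDIA driver with WSL support installed on Windows. The driver is installed on the Windows side, not inside the WSL2 distribution.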
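If you are unsure whether a shell is running under WSL2 with GPU support, the following sketch checks two signals: a WSL2 kernel string in /proc/version and the /dev/dxg device that WSL2 uses for GPU access. The helper name is_wsl2_kernel is hypothetical, and the pattern match is a heuristic rather than an official test.

```shell
#!/bin/sh
# Succeed if the kernel version string in $1 looks like a WSL2 kernel.
is_wsl2_kernel() {
    case "$1" in
        *[Mm]icrosoft*WSL2*) return 0 ;;
        *) return 1 ;;
    esac
}

if [ -r /proc/version ] && is_wsl2_kernel "$(cat /proc/version)"; then
    if [ -e /dev/dxg ]; then
        echo "WSL2 detected and GPU device /dev/dxg is present"
    else
        echo "WSL2 detected but /dev/dxg is missing; check the Windows NVIDIA driver" >&2
    fi
else
    echo "Not running under WSL2"
fi
```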
